m

2020 April 06

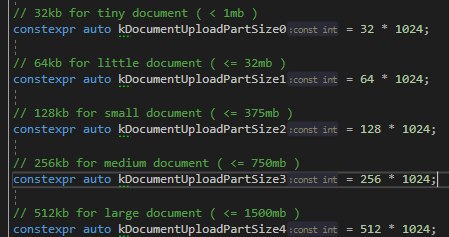

for uploads he set 512 KB blocks for all file sizes

m

and bumped the number of sessions from 2 to 8
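A minimal Python sketch of the scheme described above: every file is cut into fixed 512 KB parts regardless of its size, and the parts are spread over a fixed pool of 8 sessions. The round-robin assignment and the function names (`plan_parts`, `assign_to_sessions`) are assumptions for illustration, not the client's actual code.

```python
# Hypothetical sketch: fixed 512 KB parts spread round-robin over a fixed
# pool of upload sessions. Not tdesktop's actual implementation.
PART_SIZE = 512 * 1024  # 512 KB per part, the same for every file size
SESSION_COUNT = 8       # raised from 2 to 8


def plan_parts(file_size: int, part_size: int = PART_SIZE) -> list[tuple[int, int]]:
    """Return (offset, length) for each part; the last part may be shorter."""
    parts = []
    for offset in range(0, file_size, part_size):
        parts.append((offset, min(part_size, file_size - offset)))
    return parts


def assign_to_sessions(parts, session_count: int = SESSION_COUNT):
    """Assumed round-robin: part i goes to session i % session_count."""
    sessions = [[] for _ in range(session_count)]
    for index, part in enumerate(parts):
        sessions[index % session_count].append((index, part))
    return sessions


if __name__ == "__main__":
    size = 3 * 1024 * 1024 + 100  # 3 MB plus 100 bytes
    parts = plan_parts(size)
    print(len(parts), "parts")
    for session, queue in enumerate(assign_to_sessions(parts)):
        print("session", session, [i for i, _ in queue])
```

For a 3 MB + 100 byte file this yields seven parts (the last one 100 bytes long), so sessions 0 to 6 get one part each and session 7 stays idle.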

m

judging by this and the comments, it was rushed out

m

and he'll most likely polish it further later

m

so for now I don't see the point in doing the work twice

m

https://github.com/telegramdesktop/tdesktop/pull/6442#issuecomment-529764829

It is not that simple :( On fast networks such constant changes will improve the speed, but on slow networks it will cause overloaded connections which will lead to constant reconnecting by timeouts and decrease in speed / sometimes not loading at all.

Well, so it can be to regulate the number of concurrent loading threads depending on the file size and network speed?

I experimented with my python microservice - I managed to achieve a stable download speed of ~240 Mbit/s for one worker (process). Depending on the size of the downloaded file, from 4 to 24 threads are allocated for downloading the file; for small files, the cost of creating a large number of threads, switching contexts and locks eliminates the gain from multithreading. The worker always has at least 2 threads ready - so as not to waste resources on creating and deleting threads when loading several small files.

Depending on the file size, additional threads are allocated and live until the end of the file download process. Threads start with a randomized delay between 200ms and 2000ms. List of chunks to load is composed at start time for each thread. Lists are independently processed by each thread with minimal mutual blocking.
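The scheme in the comment above (per-thread chunk lists built before any thread starts, randomized start delays of 200 to 2000 ms, minimal shared locking) can be sketched in Python as follows. The download is simulated by slicing an in-memory buffer through a hypothetical `fetch_chunk` function, and the strided split of chunks between threads is an assumption; the comment does not say how the lists are composed.

```python
import random
import threading
import time


def fetch_chunk(data: bytes, offset: int, length: int) -> bytes:
    """Hypothetical stand-in for one network request: slice an in-memory 'file'."""
    return data[offset:offset + length]


def split_chunks(size: int, chunk: int, threads: int) -> list[list[int]]:
    """Build every thread's chunk list up front (assumed strided split)."""
    offsets = list(range(0, size, chunk))
    # Thread t gets chunks t, t + threads, t + 2 * threads, ...
    return [offsets[t::threads] for t in range(threads)]


def download(data: bytes, threads: int, chunk: int = 512 * 1024,
             min_delay: float = 0.2, max_delay: float = 2.0) -> tuple[bytes, int]:
    size = len(data)
    result = bytearray(size)
    plans = split_chunks(size, chunk, threads)  # fixed before any thread starts
    completed = 0
    lock = threading.Lock()  # guards only the shared progress counter

    def worker(offsets: list[int]) -> None:
        nonlocal completed
        # Randomized start (200-2000 ms by default) so threads don't all fire at once.
        time.sleep(random.uniform(min_delay, max_delay))
        for offset in offsets:
            piece = fetch_chunk(data, offset, min(chunk, size - offset))
            # Each thread writes its own disjoint, fixed-length byte range,
            # so in CPython the buffer itself needs no lock.
            result[offset:offset + len(piece)] = piece
            with lock:
                completed += 1

    workers = [threading.Thread(target=worker, args=(plan,)) for plan in plans]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return bytes(result), completed
```

For a quick run, pass small `min_delay`/`max_delay` values; the defaults reproduce the 200 to 2000 ms window from the comment.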

A desktop client has a problem when scrolling through gifs, for example - they are placed in the queue and processed according to the FIFO strategy, when it would be more appropriate to use LIFO - first uploading files that are in the user's field of view.
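The FIFO vs LIFO point can be illustrated with a toy queue: requests are appended as the user scrolls, and the only difference is which end the loader takes from. `LoadQueue` is a hypothetical class for illustration, not the desktop client's actual loader.

```python
from collections import deque


class LoadQueue:
    """Hypothetical media load queue serving requests FIFO or LIFO."""

    def __init__(self, lifo: bool) -> None:
        self.lifo = lifo
        self._items = deque()

    def request(self, item: str) -> None:
        # Called as each GIF scrolls into view; newest requests land at the right end.
        self._items.append(item)

    def take(self) -> str:
        # LIFO serves the most recent request (what the user is looking at now);
        # FIFO serves the oldest one (often already scrolled out of view).
        return self._items.pop() if self.lifo else self._items.popleft()

    def __len__(self) -> int:
        return len(self._items)


def drain(queue: LoadQueue) -> list[str]:
    order = []
    while len(queue):
        order.append(queue.take())
    return order


if __name__ == "__main__":
    for lifo in (False, True):
        q = LoadQueue(lifo)
        for i in range(1, 6):
            q.request(f"gif_{i}")  # user scrolls past gif_1 ... gif_5
        print("LIFO" if lifo else "FIFO", drain(q))
```

With FIFO, `gif_1` (long since scrolled past) loads first; with LIFO, `gif_5`, the one currently on screen, loads first.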

considering that he implemented it roughly the way it's described here

m

this update switches over to the official Telegram

m

with the next release it'll update itself to the official version

k

So for some damn reason the speed drops over time and it restarts the download, is that it?

That's what I got from the English

m

nope)

k

Ah, dammit

m

well, it's not something you can explain in a couple of words

m

well, in short, he used to create a fixed set of download sessions

k

So, like, he figured out you can tweak something with the threads depending on the file size

m

and they did the downloading

for large files, many threads give you a speedup

m

but for small ones it can actually slow things down

m

I suggested spawning threads dynamically depending on the file size

well, he did exactly that
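The suggestion to spawn threads dynamically based on file size can be sketched as a simple mapping. The 4 and 24 bounds come from the GitHub comment quoted earlier; the 2 MB-per-thread step (`BYTES_PER_THREAD`) is a hypothetical value chosen only for illustration, and real tuning would also have to account for network speed.

```python
import math

MIN_THREADS = 4   # lower bound mentioned in the GitHub comment
MAX_THREADS = 24  # upper bound mentioned in the GitHub comment
BYTES_PER_THREAD = 2 * 1024 * 1024  # hypothetical: roughly one thread per 2 MB of file


def threads_for(file_size: int) -> int:
    """Grow the thread count with file size, clamped to [MIN_THREADS, MAX_THREADS].

    Small files stay at the minimum, since spawning and coordinating many
    threads costs more than it saves; large files get more parallelism.
    """
    wanted = math.ceil(file_size / BYTES_PER_THREAD)
    return max(MIN_THREADS, min(MAX_THREADS, wanted))


if __name__ == "__main__":
    for size_mb in (0.1, 5, 20, 1024):
        print(size_mb, "MB ->", threads_for(int(size_mb * 1024 * 1024)), "threads")
```

Under these assumed numbers, files up to 8 MB get 4 threads, and anything above 46 MB is capped at 24.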

k

Ah

What's so complicated about that? You had me worried

m

the complexity is in the details)

why things speed up, why they slow down

how many threads you need

what block size to use

how to synchronize them, etc.

k

Got carried away scrolling through stickers