Anyone who has dealt with what gets piled up in the cloud of a typical internet service is guaranteed half an hour of healthy laughter.

When $FAMOUS_COMPANY launched in 2010, it ran on a single server in $TECHBRO_FOUNDER’s garage. Since then, we’ve experienced explosive VC-funded growth and today we have hundreds of millions of daily active users (DAUs) from all around the globe accessing our products from our mobile apps and on $famouscompany.com. We’ve since made a couple of panic-induced changes to our backend to manage our technical debt (usually right after a high-profile outage) to keep our servers from keeling over. Our existing technology stack has served us well for all these years, but as we seek to grow further it’s clear that a complete rewrite of our application is something which will somehow prevent us from losing two billion dollars a year on customer acquisition.

Why switch?

As we’ve mentioned in previous blog posts, the $FAMOUS_COMPANY backend has historically been developed in $UNREMARKABLE_LANGUAGE and architected on top of $PRACTICAL_OPEN_SOURCE_FRAMEWORK. To suit our unique needs, we designed and open-sourced $AN_ENGINEER_TOOK_A_MYTHOLOGY_CLASS, a highly available, just-in-time compiler for $UNREMARKABLE_LANGUAGE. Even with our custom runtime, however, we eventually began seeing sporadic spikes in our 99th percentile latency statistics, which grew ever more pronounced as we scaled up to handle our increasing DAU count. Luckily, all of our software is designed from the ground up for introspectability, and using ~~some BPF scripts we copied from Brendan Gregg’s website~~ our in-house profiling tools, $FAMOUS_COMPANY engineers determined that the performance bottlenecks were a result of time spent in the garbage collector.

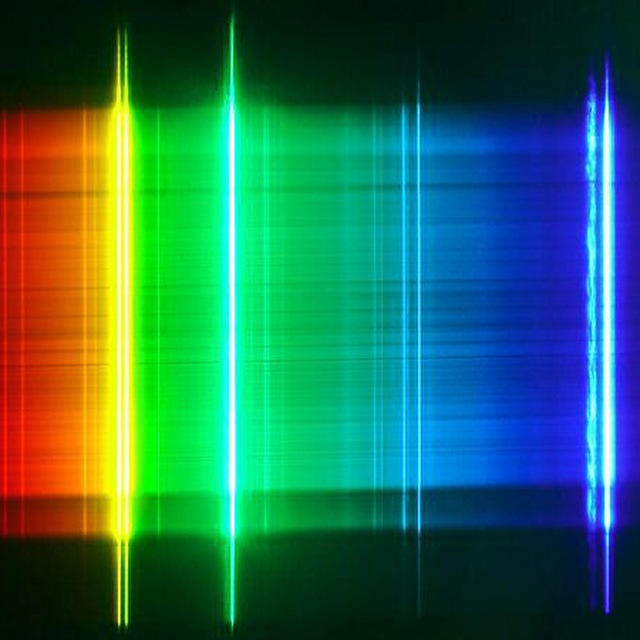

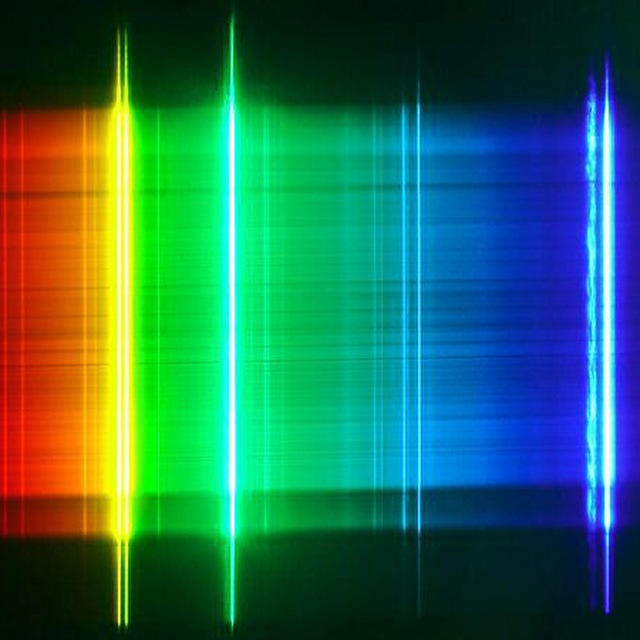

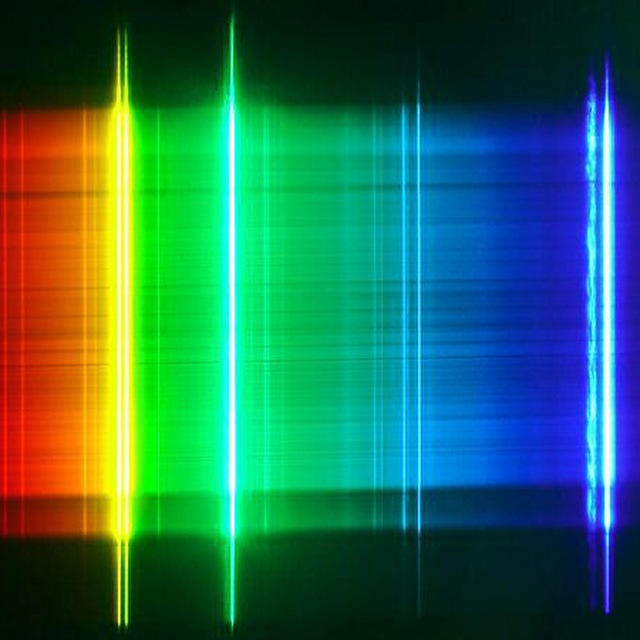

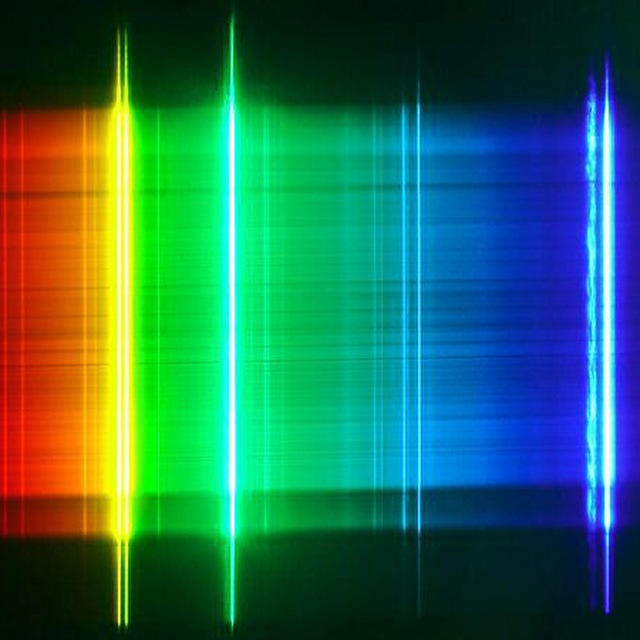

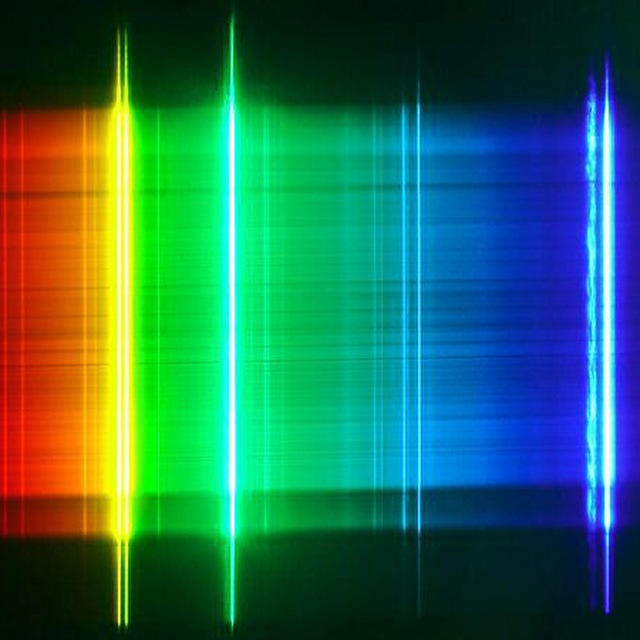

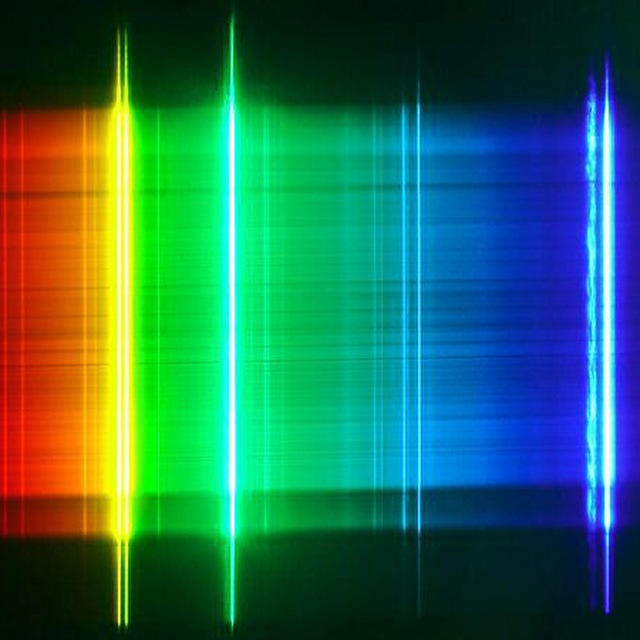

Initially, we tried messing with some garbage collector parameters we didn’t really understand, but to our surprise that didn’t magically solve our problems, so instead we disabled garbage collection altogether. This increased our memory usage, but our automatic on-demand scaler handled this for us, as the graph below shows:

Ultimately, however, our decision to switch was driven by our difficulty in hiring new talent for $UNREMARKABLE_LANGUAGE, despite it being taught in dozens of universities across the United States. Our blog posts on $PRACTICAL_OPEN_SOURCE_FRAMEWORK seemed to get fewer upvotes when posted on Reddit as well, cementing our conviction that our technology stack was now legacy code.

Pivoting to a new stack

We knew we needed to find something that could keep up with us at $FAMOUS_COMPANY scale. We evaluated a number of promising alternatives that we selected and ranked based on how many bullet points they had on their websites, how often they’d appear on the front page of Hacker News, and a spreadsheet of important language characteristics (performance, efficiency, community, ease-of-use) that we had people in the office fill out.

After careful consideration, we settled on rearchitecting our platform to use $FLASHY_LANGUAGE and $HYPED_TECHNOLOGY. Not only is $FLASHY_LANGUAGE popular according to the Stack Overflow developer survey, it’s also cross-platform; we’re using it to reimplement our mobile apps as well. Rewriting our core infrastructure was fairly straightforward: as we have more engineers than we could possibly ever need or even know what to do with, we simply put a freeze on handling bug reports and shifted our effort to $HYPED_TECHNOLOGY instead.

https://saagarjha.com/blog/2020/05/10/why-we-at-famous-company-switched-to-hyped-technology/