2019 February 28

@cydoroga when will the video be up?

c

I'll upload the videos of the latest talk and the previous one over the weekend

2019 March 04

AK

up!

2019 March 06

c

Hi everyone!

Sorry for the slow upload: importing the footage from the video camera took longer than expected.

Videos of the talks on DRL from Human Preferences and Go-Explore are available on the channel page:

https://www.youtube.com/channel/UC6KYPBaACVG0pkBWH5bkWLQ?view_as=subscriber

As they say... subscribe, like, and share with your friends!

PS: details about this Thursday's talk will be posted tomorrow afternoon.

c

Hi everyone!

This week at the seminar, Alexey Boyko, a PhD student at Skoltech, will talk about how Tensor Train decomposition can be used to solve the Bellman equation.

Thursday, YSDA (Yandex School of Data Analysis)

Room: Stanford

19:00

Approximate Dynamic Programming with Tensor Train Decomposition

————————————————————————————————-

Reinforcement Learning has emerged as a way of solving the Bellman equation by fitting generic function approximators to statistically sampled data.

It has attracted a lot of attention, partly because of its ability to cope with the curse of dimensionality of the Bellman equation.

However, other mathematical approaches to high-dimensional data have recently appeared. One of the most prominent of them is Tensor Train.

Tensor Train can be seen as an SVD-like adaptive lossy compression algorithm that lets the main mathematical operations be performed on the data without decompressing it. It can provide up to a logarithmic reduction in memory and time complexity (N -> log N), and it has beaten a number of domain-specific state-of-the-art methods for solving high-dimensional partial differential equations in physics and quantum chemistry.
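To make the "SVD-like compression" point concrete, here is a minimal NumPy sketch of the TT-SVD idea from the "Tensor-Train Decomposition" paper linked below: a d-dimensional array is split into d three-index cores by a sequence of truncated SVDs. This is an illustrative implementation written for this post, not the speaker's code, and the test tensor sin(0.1 * (i1 + ... + i6)) on an 8^6 grid is a hypothetical example chosen because all its TT ranks equal 2. For scale: TT storage grows roughly like d * n * r^2 instead of n^d, and a 12-dimensional grid with, say, 100 points per axis already has 100^12 = 10^24 cells.

```python
import numpy as np


def tt_svd(tensor, eps=1e-10):
    """TT-SVD sketch: split a d-dimensional array into d cores of shape
    (r_prev, n_k, r_next) via sequential truncated SVDs, keeping the
    relative Frobenius error below eps."""
    shape = tensor.shape
    d = len(shape)
    # Per-step truncation threshold so the accumulated error stays below eps * ||A||_F.
    delta = eps / np.sqrt(d - 1) * np.linalg.norm(tensor) if d > 1 else 0.0
    cores, rank, mat = [], 1, tensor
    for k in range(d - 1):
        mat = mat.reshape(rank * shape[k], -1)
        u, s, vt = np.linalg.svd(mat, full_matrices=False)
        # tail[i] = norm of the singular values dropped if only the first i are kept.
        tail = np.sqrt(np.cumsum(s[::-1] ** 2))[::-1]
        new_rank = max(1, int(np.sum(tail > delta)))
        cores.append(u[:, :new_rank].reshape(rank, shape[k], new_rank))
        # Carry the remainder S V^T over to the next dimension.
        mat = s[:new_rank, None] * vt[:new_rank, :]
        rank = new_rank
    cores.append(mat.reshape(rank, shape[-1], 1))
    return cores


def tt_full(cores):
    """Contract the cores back into a dense array (only feasible for small examples)."""
    result = cores[0]
    for core in cores[1:]:
        result = np.tensordot(result, core, axes=([result.ndim - 1], [0]))
    return result.reshape(result.shape[1:-1])


# Hypothetical test tensor: sin(0.1 * (i1 + ... + i6)) on an 8^6 grid.
A = np.sin(0.1 * np.indices((8,) * 6).sum(axis=0))
cores = tt_svd(A)
print("TT ranks:", [c.shape[2] for c in cores[:-1]])
print("dense entries:", A.size, "TT entries:", sum(c.size for c in cores))
print("max reconstruction error:", np.abs(tt_full(cores) - A).max())
```

Here 262,144 dense entries should shrink to 160 core entries, because sin(a + b) = sin a cos b + cos a sin b keeps every unfolding at rank 2.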

A paper at RSS (an A* conference in robotics) has shown that the Bellman equation arising in continuous stochastic control problems can also be subjected to the magic of TT compression. This makes it possible to run standard Value Iteration and Policy Iteration on a CPU for problems of up to 12 dimensions, with a state vector of up to 10^24 elements, in a few hours. Inference can also be done without GPUs on a robot's onboard computer, such as an RPi 3+ or an Intel NUC.
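For reference, this is what "standard Value Iteration" computes, in its plain tabular form: a minimal NumPy sketch that repeatedly applies the Bellman optimality backup to a dense value array. That dense array (one entry per grid cell) is exactly what blows up to 10^24 entries and what the TT approach compresses; the paper's TT-based method is not reproduced here, and the 5-state chain MDP below is a made-up toy example.

```python
import numpy as np


def value_iteration(P, R, gamma=0.9, tol=1e-8, max_iter=10_000):
    """Tabular value iteration.
    P[a, s, s2]: probability of moving s -> s2 under action a.
    R[a, s]: expected immediate reward for taking action a in state s.
    Returns the optimal state values V and a greedy policy."""
    V = np.zeros(P.shape[1])
    for _ in range(max_iter):
        # Bellman optimality backup: Q[a, s] = R[a, s] + gamma * sum_s2 P[a, s, s2] * V[s2]
        V_new = (R + gamma * (P @ V)).max(axis=0)
        converged = np.max(np.abs(V_new - V)) < tol
        V = V_new
        if converged:
            break
    Q = R + gamma * (P @ V)
    return V, Q.argmax(axis=0)


# Hypothetical 5-state chain: action 0 = left, action 1 = right; state 4 is an absorbing goal.
S = 5
P = np.zeros((2, S, S))
R = np.zeros((2, S))
for s in range(S - 1):
    P[0, s, max(s - 1, 0)] = 1.0  # left (state 0 stays put)
    P[1, s, s + 1] = 1.0          # right
P[:, S - 1, S - 1] = 1.0          # the goal is absorbing with zero reward
R[1, S - 2] = 1.0                 # reward 1 for stepping right from state 3 into the goal

V, policy = value_iteration(P, R)
print(V)
print(policy)
```

The values decay by a factor of gamma per step away from the goal, and the greedy policy moves right in every non-goal state.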

Links:

https://www.researchgate.net/publication/281275027_Efficient_High-Dimensional_Stochastic_Optimal_Motion_Control_using_Tensor-Train_Decomposition

https://www.researchgate.net/publication/220412263_Tensor-Train_Decomposition

c

Paper:

c

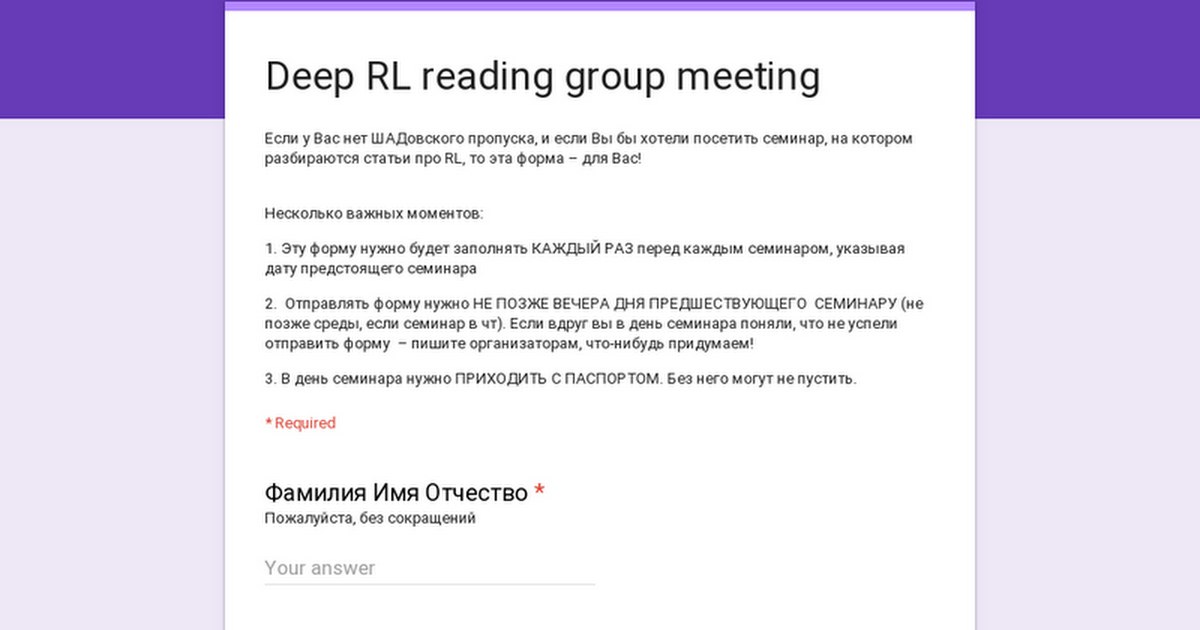

Forwarded from cydoroga

If you don't have a YSDA pass, don't forget to register via the link before 10 a.m. on Thursday:

https://docs.google.com/forms/d/e/1FAIpQLSd18PGkZuOqkWThJhmNxnmiSVFicnH4YwLVTCOkEkVQV6ZIDg/viewform

2019 March 07

NP

Hi!

Is the seminar on today?

c

It is!

But with a slight delay

We're having problems at the entrance checkpoint

NP

got it, thanks

c

The security desk isn't very keen on letting people in today: if anyone can't get in, message me and we'll sort it out

2019 March 09

c

Hi everyone!

This Thursday we had a really great seminar, in my opinion, by Alexey Boyko.

With a fairly radical view, by RL-community standards, on training agents using TT decomposition

And with his own implementation, which is cool!

The seminar recording is available on the group's channel:

https://youtu.be/mVsZlHnZGWk

PS: I'm launching a mini-contest for the channel's avatar and banner image

Send your suggestions to me in a private message. If the choice turns out to be hard, I'll run a poll later.

Attached is a screenshot showing where these will appear on the channel

2019 March 11

SK

hey guys,

The Moscow Data Fest will have an RL section, and we're inviting brave people who are ready to speak out loud about their agents and their ups and downs.

Papers, competitions, production usage, weird pet projects for the glory of RL: that's exactly what we're looking for. Or maybe you've managed to get algorithmic trading working with RL and are ready to lay all your cards on the table?

Interested? Get in touch 😉

let's make test env train again!

AB

When is it? I might give a talk about the TT-Bellman approach

SK

oh, Data Fest 6 will be on May 11

AB

Well, for now it's just a pet project)

c

Hi everyone!

This week at the seminar, Mikhail Yagudin will talk about DeepStack.

Thursday, YSDA

Room: Stanford

19:00

A neural network goes all-in 🎲

I'll talk about DeepStack, an algorithm that beats humans at No-Limit Texas Hold'em, a well-known imperfect-information game. The main idea is to use Counterfactual Regret Minimization to find a mixed strategy that approximates a Nash equilibrium strategy, and to use neural networks as function approximators.
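Counterfactual Regret Minimization is built on regret matching: at each decision point, play each action in proportion to its positive accumulated regret. In two-player zero-sum games, the average strategies of two such learners in self-play converge to a Nash equilibrium. Below is a minimal NumPy sketch of that core update on a one-shot matrix game; it is neither poker nor DeepStack's code, and the weighted rock-paper-scissors payoff matrix is a made-up example.

```python
import numpy as np


def regret_matching_strategy(cum_regret):
    """Play proportionally to positive cumulative regret; uniform if none is positive."""
    positive = np.maximum(cum_regret, 0.0)
    total = positive.sum()
    if total > 0:
        return positive / total
    return np.full(len(cum_regret), 1.0 / len(cum_regret))


def self_play(payoff, iterations=50_000):
    """Regret-matching self-play on a two-player zero-sum matrix game.
    payoff[a, b] is the row player's utility; the column player gets -payoff[a, b].
    Returns the average strategies, which approach a Nash equilibrium."""
    n_rows, n_cols = payoff.shape
    regret_row, regret_col = np.zeros(n_rows), np.zeros(n_cols)
    avg_row, avg_col = np.zeros(n_rows), np.zeros(n_cols)
    for _ in range(iterations):
        s_row = regret_matching_strategy(regret_row)
        s_col = regret_matching_strategy(regret_col)
        u_row = payoff @ s_col      # row player's expected utility of each pure action
        u_col = -(s_row @ payoff)   # column player's expected utility of each pure action
        # Regret of each action = its utility minus the utility of the current mixed strategy.
        regret_row += u_row - s_row @ u_row
        regret_col += u_col - s_col @ u_col
        avg_row += s_row
        avg_col += s_col
    return avg_row / iterations, avg_col / iterations


def exploitability(payoff, s_row, s_col):
    """Total gain both players could get by best-responding (0 at a Nash equilibrium)."""
    return np.max(payoff @ s_col) - np.min(s_row @ payoff)


# Hypothetical weighted rock-paper-scissors: wins with rock vs scissors pay 2.
payoff = np.array([[0, -1, 2], [1, 0, -1], [-2, 1, 0]], dtype=float)
row, col = self_play(payoff)
print(row, col, exploitability(payoff, row, col))
```

Since payoff @ p is zero for p = [0.25, 0.5, 0.25], that p is an equilibrium of this matrix game, and both average strategies should drift toward it. DeepStack itself runs CFR over the poker game tree and uses neural networks to estimate counterfactual values at the lookahead's depth limit; none of that is reproduced here.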

https://arxiv.org/abs/1701.01724

https://github.com/lifrordi/DeepStack-Leduc

https://www.deepstack.ai/

Come along, it'll be interesting!

c

Forwarded from cydoroga

If you don't have a YSDA pass, don't forget to register via the link before 10 a.m. on Thursday:

https://docs.google.com/forms/d/e/1FAIpQLSd18PGkZuOqkWThJhmNxnmiSVFicnH4YwLVTCOkEkVQV6ZIDg/viewform

c

ATTENTION:

A reminder that talk slots on the 21st and 28th are still open!

You can sign up via the link:

https://docs.google.com/spreadsheets/d/1ULg_NJ8ncDyluvLXmgyX9YiOdKGd4Kh-D6GNWC61Arc/edit?usp=sharing

Giving a talk helps you truly understand the material you're presenting and see the problem from a new angle.
