JF


2021 April 06

@pankrashkin that's right

JF

thanks 🙂 I need it in Scala

А

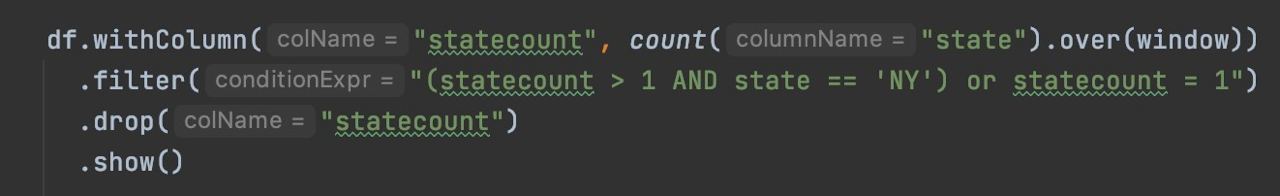

I'm not really a Scala person, so this might not work right away:

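```scala
import org.apache.spark.sql.expressions.Window
import org.apache.spark.sql.functions.{col, row_number}

// Column list taken from the target table; `tableName` and `df` are assumed to be defined earlier
val cols = spark.read.table(tableName).columns.map(x => col(x))

// Keep one row per (name, department). Ordering by "name" inside a partition keyed
// on "name" imposes no real order, so which row survives is arbitrary.
val res = df.select(cols: _*)
  .withColumn("rn", row_number().over(Window.partitionBy("name", "department").orderBy("name")))
  .filter("rn == 1")
```

JF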

awesome 🔥 thanks, it works. I'll tweak it a bit.

IS

This won't work if there are several states

You're picking a random state here)

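A minimal sketch of one way to make the pick repeatable, assuming the table has a `state` column (that name is a guess; the chat only talks about states): order the window by a real column instead of by `name`, which is constant inside each partition. This still keeps exactly one row per (`name`, `department`), so it does not help when all states are needed. If two rows can share the same state, more columns would have to be added to `orderBy` to break the tie.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.expressions.Window
import org.apache.spark.sql.functions.{col, row_number}

object DeterministicDedup {
  // Keep one row per (name, department): the one with the alphabetically first state.
  // "state" is a hypothetical column name used only for illustration.
  def firstStatePerGroup(df: DataFrame): DataFrame = {
    val w = Window.partitionBy("name", "department").orderBy(col("state"))
    df.withColumn("rn", row_number().over(w))
      .filter(col("rn") === 1)
      .drop("rn")
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[1]").appName("dedup-sketch").getOrCreate()
    import spark.implicits._

    // Tiny made-up sample: Ann appears in two states
    val df = Seq(
      ("Ann", "Sales", "TX"),
      ("Ann", "Sales", "CA"),
      ("Bob", "IT", "NY")
    ).toDF("name", "department", "state")

    firstStatePerGroup(df).orderBy("name").show()
    spark.stop()
  }
}
```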

JF

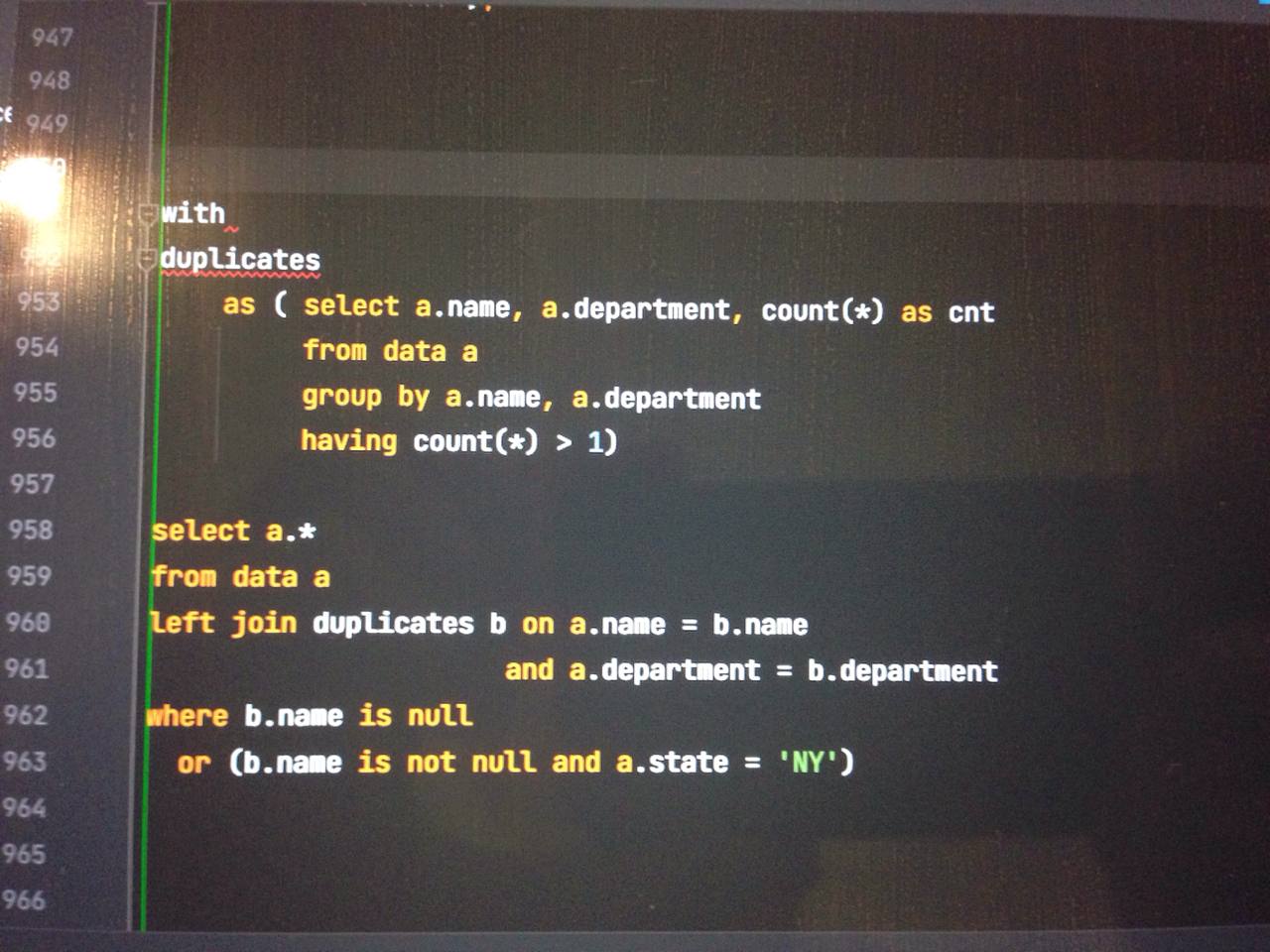

Did it this way; for some reason I got completely lost with collect_set
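For the `collect_set` route, a minimal sketch that keeps every distinct state per (`name`, `department`) instead of picking one, again assuming a `state` column (hypothetical name). `collect_set` drops duplicates but does not guarantee element order, so `array_sort` is wrapped around it here to make the result stable.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{array_sort, col, collect_set}

object AllStates {
  // One row per (name, department) with every distinct state in a sorted array.
  // collect_set removes duplicates but gives no element order, hence array_sort.
  // "state" is a hypothetical column name used only for illustration.
  def statesPerGroup(df: DataFrame): DataFrame =
    df.groupBy("name", "department")
      .agg(array_sort(collect_set(col("state"))).as("states"))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[1]").appName("collect-set-sketch").getOrCreate()
    import spark.implicits._

    val df = Seq(
      ("Ann", "Sales", "TX"),
      ("Ann", "Sales", "CA"),
      ("Ann", "Sales", "TX"), // duplicate state, collapsed by collect_set
      ("Bob", "IT", "NY")
    ).toDF("name", "department", "state")

    statesPerGroup(df).orderBy("name").show(false)
    spark.stop()
  }
}
```

Unlike the `row_number` version, nothing is dropped here: Ann ends up with both `CA` and `TX` in one array.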

JF

thanks everyone for the help 🙂 awesome community

2021 April 07

АА

Guys, off-topic question: could you tell me where I can grab some React JS templates? (I've already googled; maybe there are some lesser-known sites or Telegram channels)

ЕГ

Around here we only accept offers starting at 300k rubles per task

@pomadchin


ЕГ

GP

great news!

M

Where exactly is it so great, by the way?

ПФ

IMHO, everywhere! You just look at it, and it's great

ПФ

Well, it depends on what you're looking for, of course

M

I'm trying to work with Kubeflow on-premise without the Kubeflow UI; so far it's painful

ПФ

Why without the UI, though? The whole point is the UI. It's there so that things are easy for non-engineers

M

I need to create pipelines, experiments and so on, plus runs, through the API

M

And random 500 errors in that same UI don't look great either